Nvidia’s eye-tracking system blurs the lines for more immersive VR environments

The human eye can only focus on a surprisingly small area at a time, while our peripheral vision gives us the general gist of what else is nearby. That means much of the processing power spent rendering an entire virtual reality scene in full detail is wasted, since you’re only really taking in a small area of the screen at any given moment. Nvidia has developed a rendering technique that redirects that wasted processing power, letting developers create more immersive VR environments.

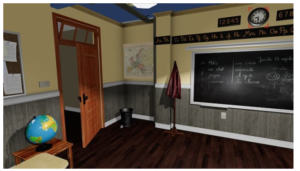

The technique, called foveated rendering, gets its name from the fovea centralis, a small pit in the retina that packs in far more cones than other areas, giving us the ability to see fine detail when looking directly at something. Nvidia’s new algorithm takes some of the strain off the graphics hardware by fully rendering only the area around the point the user is currently looking at, and progressively blurring the rest of the scene outward from there.
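To make the idea concrete, here is a minimal sketch, not Nvidia’s actual algorithm: each pixel of a grayscale image is blurred with a box filter whose radius is zero inside an assumed foveal region around the gaze point and grows with distance from it. The function names and the parameters `fovea_px`, `px_per_step` and `max_radius` are hypothetical, chosen only for illustration; a real renderer would also save work by shading the periphery at lower resolution rather than just blurring a finished image.

```python
import math
from typing import List, Tuple

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def blur_radius_for(dist: float, fovea_px: float, px_per_step: float, max_radius: int) -> int:
    """Hypothetical falloff: no blur inside the fovea, then one extra pixel of
    blur radius every `px_per_step` pixels of eccentricity, capped at `max_radius`."""
    if dist <= fovea_px:
        return 0
    return min(max_radius, int((dist - fovea_px) // px_per_step) + 1)


def box_mean(img: Image, x: int, y: int, r: int) -> float:
    """Mean of the (2r+1)x(2r+1) window around (x, y), clipped at the image borders."""
    h, w = len(img), len(img[0])
    total, count = 0.0, 0
    for yy in range(max(0, y - r), min(h, y + r + 1)):
        for xx in range(max(0, x - r), min(w, x + r + 1)):
            total += img[yy][xx]
            count += 1
    return total / count


def foveated_blur(img: Image, gaze: Tuple[float, float], fovea_px: float = 8.0,
                  px_per_step: float = 8.0, max_radius: int = 4) -> Image:
    """Keep pixels near the gaze point sharp and blur the rest more the farther out they lie."""
    gx, gy = gaze
    out: Image = []
    for y, row in enumerate(img):
        out_row = []
        for x in range(len(row)):
            dist = math.hypot(x - gx, y - gy)  # eccentricity in pixels
            r = blur_radius_for(dist, fovea_px, px_per_step, max_radius)
            out_row.append(img[y][x] if r == 0 else box_mean(img, x, y, r))
        out.append(out_row)
    return out
```

For example, running `foveated_blur` on a 32x32 checkerboard with the gaze at `(16, 16)` leaves the centre pixel untouched, while pixels near the corners are averaged toward mid-grey.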

So how does it know where you’re looking? With the help of a prototype eye-tracking headset from SMI. That system has been around for a few years, so Nvidia isn’t the first to build a foveated rendering system with it, but the team is attempting to fix some of the issues found in earlier implementations.

To do so, the team studied what details people pick up on in their peripheral vision, such as color, contrast, edges and motion. From the data gathered, the team developed an algorithm to make the scene look more natural and the peripheral blurring effect as unnoticeable as possible.

Simply blurring the outer edges loses some of the contrast and can create a kind of “tunnel vision” effect. If the foveation is too extreme, the blurred areas can also appear to flicker, distracting the user and defeating the purpose of the system. To combat this, the Nvidia team made sure to preserve contrast in the blurred areas, and as a result, users reported being comfortable with twice as much blurring as before.
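The contrast problem can be illustrated with a simple sketch. This is an assumed stand-in, not the contrast-preservation method Nvidia used: a scanline is box-blurred, which shrinks its standard deviation (used here as a crude contrast measure), and the blurred values are then stretched about their mean so the standard deviation matches the original. The names `box_blur_1d` and `restore_contrast` are hypothetical.

```python
import statistics
from typing import List


def box_blur_1d(signal: List[float], radius: int) -> List[float]:
    """Box-blur one scanline, shrinking the window at the ends."""
    n = len(signal)
    out = []
    for i in range(n):
        window = signal[max(0, i - radius): min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def restore_contrast(original: List[float], blurred: List[float]) -> List[float]:
    """Stretch the blurred values about their mean so their standard deviation
    (a simple global contrast measure) matches that of the original."""
    mean_b = statistics.fmean(blurred)
    sd_o = statistics.pstdev(original)
    sd_b = statistics.pstdev(blurred)
    if sd_b == 0:
        return list(blurred)  # flat signal: no deviations left to stretch
    gain = sd_o / sd_b
    return [mean_b + (v - mean_b) * gain for v in blurred]
```

With `row = [0.0, 0.0, 1.0, 1.0] * 8`, `box_blur_1d(row, 2)` has a noticeably lower standard deviation than `row`, and `restore_contrast(row, ...)` brings it back to the original value while the fine detail stays blurred away. The stretched values can fall outside the valid intensity range, so a practical version would need clamping or a local, rather than global, contrast measure.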

“That’s a big deal,” says Nvidia researcher Anjul Patney, “because, with VR, the required framerates and resolutions are increasing faster than ever.”

The more the foveated rendering system can comfortably blur, the more resources can be redirected to making that little in-focus spot pop.

http://www.gizmag.com/nvidia-eyetracking-vr/44493/